
The TRun sub-classes deal with the use of computer resources. Three modes are available:

- TSequentialRun: evaluations are computed sequentially on a single core.
- TThreadedRun: evaluations are computed using the multi-core resources of the computer. It uses the pthread library with the shared memory paradigm. This runner cannot be combined with some assessors, because memory conflicts must be avoided.
- TMpiRun: evaluations are computed using a network of computers (usually multi-core). It uses the message passing interface (MPI) library with a distributed memory paradigm.

If you run on a single node, you can use either MPI or threads. MPI parallelisation is more expensive but more generic (no thread-safety problem).

Warning

Regardless of the solution chosen to distribute the computation (threads or MPI), as long as it is parallelised, the number of allocated resources (given in the constructor or specified to the mpirun command) should always be strictly greater than 1. CPU number 1 will always be the "master", which deals with the distribution to its "slaves" and the gathering of all results.

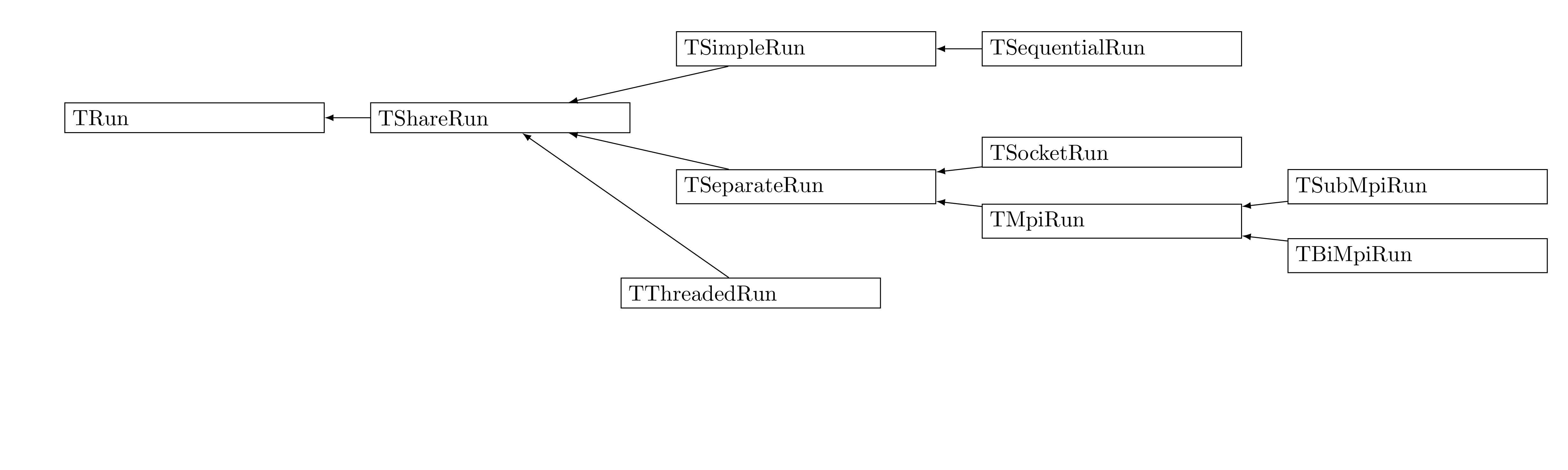

The runner class hierarchy is smaller than the assessor one, as can be seen in Figure VIII.3. It starts with the TRun class, a pure virtual class in which a few methods are given along with an integer describing the number of CPUs.

With TSequentialRun, there is no distribution. If evaluations are fast, it remains the simplest way to run them. Here is the interpretation of the inherited methods:

- startSlave: exits immediately;
- onMaster: the test is true;
- stopSlave: cleans TEval.

The TSequentialRun constructor has only one argument, a pointer to a TEval object.

# Creating the sequential runner

srun = Relauncher.TSequentialRun(code)
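
The role of the three inherited methods may be easier to see in a toy model. The sketch below is plain Python and does not use URANIE: ToyRun, ToySequentialRun, run_macro and the snake_case method names are hypothetical stand-ins for TRun, TSequentialRun, startSlave, onMaster and stopSlave, and the macro layout (start, test, work, stop) is an assumption inferred from the descriptions above.

```python
from abc import ABC, abstractmethod


class ToyRun(ABC):
    """Hypothetical stand-in for TRun: an abstract runner holding a CPU count."""

    def __init__(self, evaluate, n_cpu=1):
        self.evaluate = evaluate  # stand-in for the TEval object
        self.n_cpu = n_cpu

    @abstractmethod
    def start_slave(self): ...

    @abstractmethod
    def on_master(self): ...

    @abstractmethod
    def stop_slave(self): ...


class ToySequentialRun(ToyRun):
    """No distribution: everything happens in the calling thread."""

    def start_slave(self):
        return None  # nothing to start, exits immediately

    def on_master(self):
        return True  # the only process is the master

    def stop_slave(self):
        self.evaluate = None  # release the evaluation object


def run_macro(runner, points):
    """Assumed macro layout: start workers, do the master work, stop workers."""
    results = []
    runner.start_slave()
    if runner.on_master():
        results = [runner.evaluate(x) for x in points]
        runner.stop_slave()
    return results


srun_toy = ToySequentialRun(lambda x: x * x)
squares = run_macro(srun_toy, [1, 2, 3])
```

In this toy version the same run_macro function would work unchanged with any other runner that implements the three methods, which is the point of sharing one base class.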

With TThreadedRun, the program starts using a single resource (the main thread), then launches evaluations on dedicated threads (children), uses them and stops them before ending.

Threads use a shared memory paradigm: all threads have access to the same address space. All objects that are used are defined by the main thread. Evaluation threads only use (or duplicate) them. It's only the main thread that follows the macro instructions, while its children only do the evaluation loop. Here is the interpretation of the inherited methods:

- startSlave starts some threads dedicated to evaluation (it is a non-blocking operation), and then exits. These threads loop over evaluations.
- As we are on the master thread, onMaster is true.
- stopSlave puts fake items for evaluation. When a thread gets one, it stops its evaluation loop and exits. The main thread waits for all threads to be stopped.
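
The start/stop mechanism described above can be imitated with the Python standard library. This is only a sketch of the general pattern (worker threads reading a queue until they receive a sentinel), not the URANIE implementation: STOP, evaluation_loop, start_workers and stop_workers are hypothetical names chosen for illustration.

```python
import queue
import threading

STOP = object()  # the "fake item" that tells a worker to leave its loop


def evaluation_loop(tasks, results, evaluate):
    """Loop run by each worker thread until it receives the STOP item."""
    while True:
        item = tasks.get()
        if item is STOP:
            break
        index, value = item
        results[index] = evaluate(value)


def start_workers(n_threads, tasks, results, evaluate):
    """Non-blocking: starts the threads and returns at once."""
    workers = [
        threading.Thread(target=evaluation_loop, args=(tasks, results, evaluate))
        for _ in range(n_threads)
    ]
    for worker in workers:
        worker.start()
    return workers


def stop_workers(workers, tasks):
    """Queue one STOP item per worker, then wait for all of them."""
    for _ in workers:
        tasks.put(STOP)
    for worker in workers:
        worker.join()


inputs = [1.0, 2.0, 3.0, 4.0, 5.0]
tasks = queue.Queue()
results = [None] * len(inputs)

workers = start_workers(4, tasks, results, lambda x: 2.0 * x)
for index, value in enumerate(inputs):
    tasks.put((index, value))
stop_workers(workers, tasks)
```

Because the queue is first-in first-out, every real item is taken before any STOP item, so all evaluations are finished once the final join returns.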

The TThreadedRun constructor has two arguments: a pointer to a TEval object and an integer, which is the number of threads that the user wants to use.

# Creating the threaded runner

trun = Relauncher.TThreadedRun(code,4)

One important point is that the user evaluation function needs to be thread safe. For example, with the old ROOT5 interpreter, the rosenbrock macro (see Section VIII.2) cannot be distributed with threads. This is because the user function is interpreted and the ROOT interpreter is not thread safe. You have to turn it into a compiled format to make it work with threads.

Thread-safety problems usually come from variable assignment. If two (or more) threads modify the same memory address at the same time, the expected behaviour of the code is usually disturbed. The address can belong to a global or static variable, a working variable embedded in an object, a file descriptor, etc. Thread-safety bugs are difficult to squash. It may be necessary to clone objects to avoid such problems.
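
As an illustration of the kind of conflict described above, here is a minimal sketch in plain Python (no URANIE involved). It protects a shared working variable with a lock, which is a common alternative to cloning objects and is not necessarily what the library does internally. Accumulator and worker are hypothetical names.

```python
import threading


class Accumulator:
    """Shared working variable protected by a lock."""

    def __init__(self):
        self.total = 0
        self._lock = threading.Lock()

    def add(self, value):
        # Without the lock, the read-modify-write below could interleave
        # between threads and some updates could be lost.
        with self._lock:
            self.total += value


def worker(acc, n_updates):
    """Each thread repeatedly updates the same shared object."""
    for _ in range(n_updates):
        acc.add(1)


acc = Accumulator()
threads = [threading.Thread(target=worker, args=(acc, 10_000)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

Cloning would instead give each thread its own Accumulator and sum the totals at the end, which avoids contention on the lock at the cost of extra memory.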

Warning

One might want to use TDataServer objects in the code of TCJitEval instances that would be distributed with a TThreadedRun object. In this case, it is mandatory to call the method EnableThreadSafety() to remove all dataservers and trees from the internal ROOT register, which would otherwise induce race conditions. This can be done as below:

import ROOT

ROOT.EnableThreadSafety()

With TMpiRun, many processes are started on different nodes. MPI uses the distributed memory paradigm: each process has its own address space. All processes run the same macro and define their own objects. If you create a big object in the evaluation/master code section, all processes allocate it (this is why, generally, the main dataserver object is created in the onMaster part, to avoid creating as many dataservers as there are slaves).

- The constructor calls MPI_Init for the initial process synchronisation. This step is automatic, as long as one is running through the on-the-fly C++ compiler thanks to the root command, or in Python.
- startSlave either exits immediately for the master process (id=0) or starts the evaluation loop for the other ones.
- Depending on whether we are on the master process or not, onMaster is true or false.
- stopSlave puts fake items for evaluation and then exits. Evaluation processes get them, stop their loop, exit from startSlave, and usually skip the master block instructions. Unlike with threads, the master process does not wait for the evaluation processes.
- The destructor calls MPI_Finalize for the final process synchronisation. In the specific case of Python, where ROOT and Python (through the garbage collector approach) compete to destroy objects, a specific line (ROOT.SetOwnership(run, True)) has to be added, as discussed in Section XIV.9.6.2.

The TMpiRun constructor has one argument, a pointer to a TEval object.

# Creating the MPI runner

mrun = Relauncher.TMpiRun(code)

To run a macro in an MPI context, you have to use the mpirun command. Here is a simple way to run our example:

mpirun -n 8 python RosenbrockMacro.py

Here, we launch python on 8 cores (-n 8). The mpirun command has other options that are not covered here.

In general, one runs an MPI job on a cluster with a batch scheduler. The previous command is put in a shell script along with the batch scheduler parameters. The ROOT macro does not use a viewer, but saves its results in a file. They are analysed later in an interactive session, using all the ROOT facilities.

Warning

The TMpiRun implementation also requires at least 2 cores (one being the master and the other one the core on which assessors are run). If only one core is provided, the loop will run forever.

In some cases, users want to use several levels of parallelism. Two examples are given in the use cases section: the first one is an optimization where each evaluation carries out a design of experiments, launches many evaluations and returns a summary of the values (max, min, mean); the second one uses an MPI function (TPythonEval) for evaluation.

For a two-level MPI, two classes are provided: TBiMpiRun and TSubMpiRun. TBiMpiRun is the high-level class and splits the MPI resources into different parts: one resource for the TMaster and n resources for each TEval. TSubMpiRun gives access to the n resources reserved for evaluation. For example, with 16 resources, 1 resource is reserved for the master and the rest can be split into 3 parts of 5 resources each for evaluation. TBiMpiRun has an extra parameter, an int defining the number of resources for each evaluation. This number must be compatible with the available resources (with 16 resources, it could only be 3 or 5).
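
The arithmetic behind this compatibility rule can be sketched in a few lines of plain Python. The function split_resources is hypothetical and not part of URANIE. It assumes that "compatible" means the resources left after reserving one for the master divide evenly into groups of the requested size, and it does not reproduce any further restriction the real class may apply.

```python
def split_resources(total, per_eval):
    """Return the number of evaluation groups for a two-level MPI split.

    Assumption: one resource goes to the master and the remaining ones
    must divide evenly into groups of `per_eval` resources.
    """
    if total < 2:
        raise ValueError("at least 2 resources are needed (master + 1 worker)")
    if per_eval < 1:
        raise ValueError("per_eval must be a positive integer")
    workers = total - 1  # one resource is reserved for the master
    groups, leftover = divmod(workers, per_eval)
    if leftover:
        raise ValueError(
            f"{workers} evaluation resources cannot be split into groups of {per_eval}"
        )
    return groups


groups_of_5 = split_resources(16, 5)  # 15 worker resources in groups of 5
groups_of_3 = split_resources(16, 3)  # 15 worker resources in groups of 3
```

With 16 resources, a group size of 4 is rejected by this check because 15 is not a multiple of 4.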